The Beginner Programmer

Shared thoughts, experiments, simulations and simple ideas with Python, R and other languages

Monday, 23 December 2019

Electromagnetic forces between busbars

Saturday, 19 October 2019

Shaded pole motor magnetic field simulation in FEMM

Shaded pole motors are a variety of induction motor. They are a rather cheap type of motor: you can usually find them in old washing machines (powering the water pump, for example) or even in new white goods, where they may be used for periodic (but not frequent) tasks, for instance turning a wheel every 30 s. It is extremely rare to find a shaded pole motor that runs continuously. The picture below (from Wikipedia) shows an example of such a motor.

Thursday, 16 August 2018

Linear programming in R

Linear programming is a technique to solve optimization problems whose constraints and outcome are represented by linear relationships.

Simply put, linear programming lets you solve problems of the following kind:

- Maximize/minimize $\hat C^T \hat X$

- Under the constraint $\hat A \hat X \leq \hat B$

- And the constraint $\hat X \geq 0$
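To make the formulation concrete, here is a minimal Python sketch (not the R code of the original post) that solves a tiny two-variable problem by checking every corner of the feasible region. The function name `solve_lp_2d` and the numbers in the example are made up for illustration, and the approach assumes the feasible region is bounded, so that the optimum sits at a vertex. A real solver would use the simplex method or an interior point method instead.

```python
from itertools import combinations


def solve_lp_2d(c, A, b, eps=1e-9):
    """Maximize c.x subject to A x <= b and x >= 0 (two variables only).

    Brute force: intersect every pair of constraint lines, keep the
    feasible intersection points and return the best one.
    Assumes a bounded, non-empty feasible region.
    """
    # Each row is (p, q, r), meaning p*x + q*y <= r.
    rows = [(row[0], row[1], bi) for row, bi in zip(A, b)]
    # Express x >= 0 and y >= 0 in the same "<=" form.
    rows += [(-1.0, 0.0, 0.0), (0.0, -1.0, 0.0)]

    best_point, best_value = None, None
    for (a1, a2, r1), (a3, a4, r2) in combinations(rows, 2):
        det = a1 * a4 - a2 * a3
        if abs(det) < eps:
            continue  # parallel lines, no single intersection point
        # Cramer's rule for the 2x2 system.
        x = (r1 * a4 - a2 * r2) / det
        y = (a1 * r2 - a3 * r1) / det
        if all(p * x + q * y <= r + eps for p, q, r in rows):
            value = c[0] * x + c[1] * y
            if best_value is None or value > best_value:
                best_point, best_value = (x, y), value
    return best_point, best_value


# Hypothetical example: maximize 3x + 2y
# subject to x + y <= 4, x + 3y <= 6, x <= 3, x >= 0, y >= 0.
point, value = solve_lp_2d(
    c=[3.0, 2.0],
    A=[[1.0, 1.0], [1.0, 3.0], [1.0, 0.0]],
    b=[4.0, 6.0, 3.0],
)
print(point, value)
```

For this example the feasible corners are (0, 0), (3, 0), (3, 1) and (0, 2), and the objective is largest at (3, 1).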

Automate your garden lights DIY style: getting practical!

This post is a follow-up to this project.

After a lot of fiddling around, I finally built the circuit for automating the turn on and off of my garden lights. It was about time, wasn’t it? Yeah, I know, it took me some time, but it was worth it since I think the end result is particularly nice and I enjoyed the process of building the circuit.

Goals of this project

The objectives of this DIY project are the following:

- Automate the turn on and off of garden lights: 4 LED lights powered from a battery which is recharged every day through an appropriately sized solar panel. The lights should turn on in the evening and turn off in the morning. Ideally turn on and turn off should be adjustable with ambient light.

- Improve my basic knowledge of how to design a proper circuit and a PCB, source components and debug analog circuitry.

- Keep the project relatively cheap.

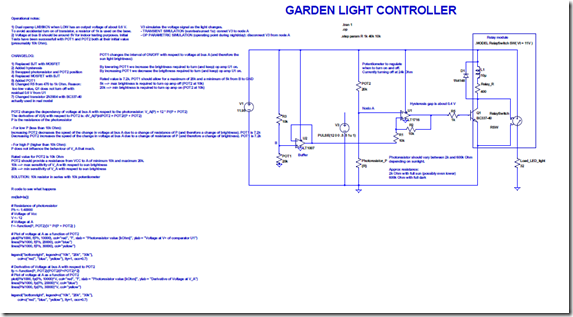

The circuit in LTSpice

Below you can find a picture of the circuit simulated in LTSpice with all my notes following testing and a close up of the circuit.

Monday, 13 August 2018

PCA revisited: using principal components for classification of faces

This is a short post following the previous one (PCA revisited).

In this post I’m going to apply PCA to a toy problem: the classification of faces. Again I’ll be working on the Olivetti faces dataset. Please visit the previous post PCA revisited to read how to download it.

The goal of this post is to fit a simple classification model to predict, given an image, the label to which it belongs. I’m going to fit two support vector machine models and then compare their accuracy.


- The first model uses as input data the raw (scaled) pixels (all 4096 of them). Let’s call this model “the data model”.

- The second model uses as input data only some principal components. Let’s call this model “the PCA model”.

Sunday, 12 August 2018

PCA revisited

Principal component analysis (PCA) is a dimensionality reduction technique which might come in handy when building a predictive model or in the exploratory phase of your data analysis. It is often the case that, just when it would be most handy, you have forgotten it exists, but let’s neglect this aspect for now ;)

I decided to write this post mainly for two reasons:

- I had to put some order in my mind about the terminology used and complain about a few things.

- I wanted to try to use PCA in a meaningful example.

Friday, 10 August 2018

IV characteristics of diodes

This week I finally got to play around a little with some electronic components I ordered and test them before using them on side projects. My goal was to find the IV curve of a 3 V, 0.5 W Zener diode I need to use on a project. Since I had all the instruments set up, as a bonus I decided to find the IV curve of a blue LED as well.

Test circuit and instruments setup.

The test circuit I used for the measurements is the following

Sunday, 4 March 2018

Calculating the DFT in C++

When you learn about the Fourier transform and what it can show you about a signal, you immediately start thinking about its possible applications. The Fourier transform, however, deals with continuous-time signals while, in practice, computers deal with discrete-time signals (i.e. a sampled version of the original continuous-time signal). When it comes to a discrete-time signal, you can calculate a discrete Fourier transform (DFT) to get the frequency content of the signal.
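As a quick illustration of the idea, here is a minimal Python sketch of the DFT computed straight from its definition, $X_k = \sum_{t=0}^{N-1} x_t e^{-2\pi i k t / N}$. It is not the C++ code of the original post; the function name `dft` and the test signal are just assumptions made for the example. The direct sum costs $O(N^2)$ operations, which is fine for small signals.

```python
import cmath
import math


def dft(samples):
    """Return the DFT of a sequence of real or complex samples.

    Direct implementation of the definition, O(N^2) operations.
    """
    n = len(samples)
    return [
        sum(x * cmath.exp(-2j * cmath.pi * k * t / n)
            for t, x in enumerate(samples))
        for k in range(n)
    ]


# Hypothetical test signal: one full cosine cycle over 8 samples.
signal = [math.cos(2 * math.pi * t / 8) for t in range(8)]
spectrum = dft(signal)
for k, value in enumerate(spectrum):
    print(k, round(abs(value), 6))
```

With this signal the magnitude is concentrated in bins 1 and 7 (each about 4, that is N/2), while the other bins are numerically zero.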

Monday, 18 September 2017

Putting some of my Python knowledge to a good use: a Reddit reading bot!

One of the perks of knowing a programming language is that you can build your own tools and applications. Depending on what you need, it may even be a fast process, since you usually do not need to write production grade code or detailed documentation (although it might still be helpful in the future).

I’ve got used to reading news on Reddit. However, it can sometimes be a bit time consuming, since it tends to keep you wandering through each and every rabbit hole that pops up. This is fine if you are commuting and have some spare time to spend browsing the web, but sometimes I just need a quick glance at what’s new and relevant to my interests.

In order to automate this search process, I’ve written a bot that takes as input a list of subreddits, a list of keywords and flags and browses each subreddit looking for the given keywords.

If a keyword is found in either the body or the title of a post submitted to one of the selected subreddits, the post title and the links are either printed to the console or saved to a file (in this case the file name must be supplied when starting the search).

The bot is written using praw.
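The matching step described above can be sketched in a few lines of plain Python. This is only an illustration and not the bot's actual code: it does not call praw, the posts are hypothetical dictionaries with `title`, `body` and `url` keys, and the case-insensitive comparison is my own assumption.

```python
def find_matches(posts, keywords):
    """Return (title, url) pairs for posts mentioning any keyword.

    `posts` is a list of dicts with "title", "body" and "url" keys
    (a stand-in for whatever the Reddit API would return).
    Matching is case-insensitive, which is an assumption of this sketch.
    """
    lowered = [keyword.lower() for keyword in keywords]
    matches = []
    for post in posts:
        text = (post["title"] + " " + post["body"]).lower()
        if any(keyword in text for keyword in lowered):
            matches.append((post["title"], post["url"]))
    return matches


# Hypothetical data, for illustration only.
example_posts = [
    {"title": "New Python release", "body": "Details inside.",
     "url": "https://example.com/1"},
    {"title": "Weekly thread", "body": "Talk about anything.",
     "url": "https://example.com/2"},
    {"title": "Plotting tips", "body": "Using python and R together.",
     "url": "https://example.com/3"},
]

for title, url in find_matches(example_posts, ["python"]):
    print(title, url)
```

Printing to the console is used here; saving to a file would simply replace the `print` call with a write to the file name supplied by the user.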

Sunday, 3 September 2017

Let Python do the job for you: AutoCAD drawings printing bot

Recently I’ve been getting familiar with AutoCAD and at the same time I’m trying to improve my Python skills. Odd mix, huh?!

While trying to improve my Python skills, I thought I could practise by automating boring tasks. I remembered that a few years ago I was given a boring job which involved printing a lot of drawings directly to PDF from AutoCAD. I was too lazy to print the drawings one by one and I knew the process could be automated. At the time I put together a Python script that did the job fine, but it was a bit messy. I thought I could make an improved version.

When nice APIs are not available, as in the case of AutoCAD (at least that was the case a few years ago; nowadays things may have changed), PyAutoGUI can help automate boring tasks by driving the mouse and keyboard directly.

Saturday, 2 September 2017

How would you make a very simple and rotating magnetic field starting from a three phase power supply?

Recently I had a brilliant idea :D why not make a rotating magnetic field that can rotate a needle of a compass? (or any other magnetic needle for that matter).

Ok but the design must be very basic. One possible option would be the following:

Simulation of the electric field of a three phase cable using FEMM

If you think of a simple cable, with a copper core and PVC insulation, calculating the electric field generated in the insulation layer using pen and paper may still be feasible. However, using FEMM greatly improves your life when doing calculations as an engineer, student, or “just” curious person.
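For reference, the pen and paper result for the simplest case is easy to code. The sketch below assumes an idealized coaxial geometry that is not stated in the post: a single conductor of radius $a$ at voltage $V$, one uniform insulation layer, and a grounded sheath at radius $b$. Under those assumptions the radial field is $E(r) = \frac{V}{r \ln(b/a)}$. The function name and the numeric values are hypothetical.

```python
import math


def coaxial_field(r, v, a, b):
    """Radial electric field magnitude (V/m) at radius r.

    Idealized single-core coaxial cable: conductor radius a at
    voltage v, one uniform dielectric, grounded sheath at radius b.
    All lengths in metres.
    """
    if not a <= r <= b:
        raise ValueError("r must lie inside the insulation layer")
    return v / (r * math.log(b / a))


# Hypothetical values, for illustration only.
v = 1000.0   # conductor voltage in volts
a = 0.005    # conductor radius in metres
b = 0.010    # sheath radius in metres
for r in (a, 0.0075, b):
    print(r, coaxial_field(r, v, a, b))
```

The field is highest at the conductor surface and falls off as $1/r$. Anything beyond this geometry (several layers, air gaps, three phases) is where a finite element tool becomes much more convenient.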

This simple cable can be modelled using FEMM as follows

The cable has a total diameter of 7 cm, with a first PVC insulation layer with a radius of 2.6 cm, a 0.05 cm air gap and another PVC insulation layer.

Friday, 1 September 2017

Magnetics simulation with FEMM

FEMM stands for Finite Element Method Magnetics, and it is a nice piece of software for solving magnetics and electrostatics problems.

I’ve known FEMM for at least a couple of years but I’ve never tried it out and used it at its full power! Now the time has come to do that!

In this post I’m going to present the results of the following simulations:

- A C shaped electromagnet (detailed results).

- The magnetic field of the rotor of a 4-pole synchronous machine (brief overview).

Wednesday, 30 August 2017

Another typical control problem: balancing a ball on a beam

Balancing a ball on a beam is not a problem you are likely to face in everyday life. However, this simple engineering example can be extended to more complex and more interesting control problems, such as

- Control of temperature in a room

- Control of robots

- Control of automated cars

- Control of industrial processes

The main problem tackled in this article is the design and implementation of a basic PID-based controller to control the position of a ping pong ball on a beam. Ideally the controller should be able to bring the ball to whatever position along the x axis the user chooses. This is a simple schematic of the physical system
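To give a feel for what a PID controller does, here is a minimal Python sketch. It is not the controller built in the article: the plant is an idealized model in which the controller output directly sets the acceleration of the ball (no beam dynamics, friction or sensor noise), and the gains, setpoint and time step are values picked for the example.

```python
class PID:
    """Textbook PID controller with a backward-difference derivative."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative action on the very first sample.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return (self.kp * error
                + self.ki * self.integral
                + self.kd * derivative)


def simulate(setpoint=0.2, duration=20.0, dt=0.01):
    """Drive an idealized ball (x'' = u) towards `setpoint` (metres)."""
    # Hypothetical gains, chosen so the ideal loop is well damped.
    pid = PID(kp=12.0, ki=8.0, kd=6.0)
    x, v = 0.0, 0.0
    for _ in range(int(duration / dt)):
        u = pid.update(setpoint, x, dt)  # commanded acceleration
        v += u * dt
        x += v * dt
    return x


print(simulate())
```

On a real beam the gains would need to be retuned, since the servo, the sensor and the rolling dynamics of the ball all add delays that this sketch ignores.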

Modelling a DC motor using LTspice, Simulink and Matlab

Electrically speaking, a permanent magnet DC motor can be modelled as follows:

Applying Kirchhoff’s voltage law (KVL), we obtain the following differential equation:

$$v = Ri+L\frac{di}{dt}+e$$

where $R$ is the equivalent resistance of the brushes plus the windings, $L$ is the inductance as seen from the external terminals of the motor and $e$ is the back EMF. Usually $R$ is very small and can be difficult to measure with a multimeter. The back EMF can be expressed as a function of the speed of the motor: $e = k\phi\omega$.
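The electrical equation can be integrated numerically in a few lines. The Python sketch below (not the LTspice, Simulink or Matlab models of the post) rewrites it as $\frac{di}{dt} = \frac{v - Ri - k\phi\omega}{L}$ and applies forward Euler steps. It assumes the speed $\omega$ is held constant, since the mechanical equation is not part of this excerpt, and all parameter values are hypothetical.

```python
def armature_current(v, omega, R, L, k_phi, duration, dt):
    """Integrate v = R*i + L*di/dt + e with e = k_phi * omega.

    Forward Euler integration starting from i = 0, with the rotor
    speed omega (rad/s) held constant. Returns the final current (A).
    """
    i = 0.0
    e = k_phi * omega  # back EMF, constant because omega is fixed
    for _ in range(int(round(duration / dt))):
        di_dt = (v - R * i - e) / L
        i += di_dt * dt
    return i


# Hypothetical parameters, for illustration only.
i_final = armature_current(
    v=12.0,        # supply voltage in volts
    omega=100.0,   # rotor speed in rad/s
    R=1.0,         # resistance in ohms
    L=0.01,        # inductance in henries
    k_phi=0.05,    # back EMF constant in V*s/rad
    duration=0.1,  # simulated time in seconds
    dt=1e-5,       # time step in seconds
)
print(i_final)
```

After a few electrical time constants $L/R$ the current settles at $(v - k\phi\omega)/R$, which is 7 A for these numbers.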