This is a short post following the previous one (PCA revisited).

In this post I’m going to apply PCA to a toy problem: the classification of faces. Again I’ll be working on the Olivetti faces dataset. Please visit the previous post PCA revisited to read how to download it.

The goal of this post is to fit a simple classification model that predicts, given an image, the label it belongs to. I’m going to fit two support vector machine models and then compare their accuracy.

- The first model uses as input data the raw (scaled) pixels (all 4096 of them). Let’s call this model “the data model”.

- The second model uses as input data only some principal components. Let’s call this model “the PCA model”.

This will not be an in-depth fit and evaluation of the models, but rather a simple proof of concept showing that a limited number of principal components can be used for a classification task in place of the raw data.

The setup of the two models

To get an idea of the accuracy our models can achieve, I decided to run a simple 20-fold cross-validation of each model.

The data model is a simple support vector machine fitted using all the faces in the training set: its input is a vector of 4096 components.

The PCA model is the same support vector machine (with the same hyperparameters, which may however need some tweaking), fitted using 30 PCs instead of the raw pixels.
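The setup described above can be sketched roughly as follows. This is only an illustration under several assumptions: it uses scikit-learn, a synthetic stand-in for the images (the hypothetical `make_toy_faces` helper) rather than the Olivetti faces, and a linear kernel, since the post does not state the kernel or hyperparameters. Unlike the procedure used in this post, the pipeline here refits PCA inside every fold.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def make_toy_faces(n_classes=10, per_class=20, n_pixels=256, seed=0):
    """Hypothetical stand-in for face images: one noisy cluster per label."""
    rng = np.random.default_rng(seed)
    centers = rng.normal(size=(n_classes, n_pixels))
    noise = 0.5 * rng.normal(size=(n_classes * per_class, n_pixels))
    X = np.repeat(centers, per_class, axis=0) + noise
    y = np.repeat(np.arange(n_classes), per_class)
    return X, y


X, y = make_toy_faces()

# 20-fold cross-validation, shuffled so every fold mixes the labels
cv = KFold(n_splits=20, shuffle=True, random_state=0)

# "Data model": SVM on all the scaled pixels (kernel choice is an assumption)
data_model = make_pipeline(StandardScaler(), SVC(kernel="linear"))

# "PCA model": the same SVM, fed only 30 principal components
pca_model = make_pipeline(StandardScaler(), PCA(n_components=30), SVC(kernel="linear"))

data_scores = cross_val_score(data_model, X, y, cv=cv)
pca_scores = cross_val_score(pca_model, X, y, cv=cv)

print(f"data model: mean={data_scores.mean():.3f} sd={data_scores.std():.3f}")
print(f"PCA model:  mean={pca_scores.mean():.3f} sd={pca_scores.std():.3f}")
```

The numbers printed by this sketch come from synthetic data and are not comparable with the results reported in this post.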

I transformed the data by performing PCA on the dataset and, according to the analysis, 30 PCs account for about 82% of the total variance. Indeed, you can see from the plots below that the first 30 eigenvalues are those with the highest magnitude.
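As a rough illustration of how such a variance share can be computed, here is a minimal NumPy sketch. The data is synthetic (an assumption made for the example), so the figures it prints will not match the 82% found on the faces; `explained_variance_ratio` is a hypothetical helper name.

```python
import numpy as np


def explained_variance_ratio(X):
    """Share of the total variance carried by each principal component."""
    Xc = X - X.mean(axis=0)
    # Singular values of the centered data give the covariance eigenvalues:
    # lambda_i = s_i**2 / (n - 1), already sorted from largest to smallest
    s = np.linalg.svd(Xc, compute_uv=False)
    eigvals = s**2 / (X.shape[0] - 1)
    return eigvals / eigvals.sum()


rng = np.random.default_rng(0)
# Synthetic data: 64 latent directions with decaying variance, mixed into 256 features
latent = rng.normal(size=(400, 64)) * np.linspace(5.0, 0.1, 64)
X = latent @ rng.normal(size=(64, 256))

ratio = explained_variance_ratio(X)
cumulative = np.cumsum(ratio)

k = 30
print(f"first {k} PCs explain {cumulative[k - 1]:.1%} of the variance")

# Smallest number of PCs whose cumulative share reaches 82%
n_needed = int(np.searchsorted(cumulative, 0.82)) + 1
print(f"{n_needed} PCs are needed to reach 82%")
```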

Results

After running the cross-validation on the two models, the main results I found are the following:

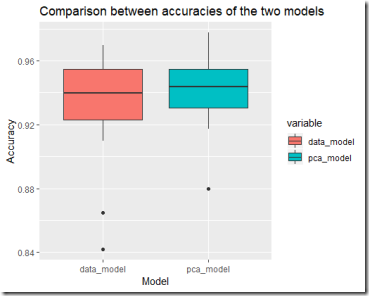

- The average accuracy of the two models is about the same, with the PCA model slightly ahead: 93% for the data model vs 94% for the PCA model. The standard deviation also favours the PCA model: 3.2% vs 2.3%. The accuracy isn’t great (I obtained 98% on this dataset using other models), but this is just an “out of the box” test with no hyperparameter tuning or other fine-tuning.

- The second model fits the data much faster than the first one.

The results are summarised in the boxplot below

Note that the PCA model has less information available than the model using the original data. However, that information is condensed into fewer variables (the 30 PCs) in a more compact way. This technique is great when you are using models that are sensitive to correlation in the data: since the PCs are uncorrelated, you can transform your original data (which might be correlated and cause problems in the model) and use the principal components instead.
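The claim that the PCs are uncorrelated can be checked numerically. The sketch below uses a small synthetic dataset (an assumption for the example) with two strongly correlated features, and compares the correlation matrix before and after projecting onto the eigenvectors of the covariance matrix.

```python
import numpy as np

rng = np.random.default_rng(1)

# Two strongly correlated features plus an independent one
a = rng.normal(size=500)
X = np.column_stack([a, a + 0.1 * rng.normal(size=500), rng.normal(size=500)])

# PCA via the eigendecomposition of the covariance matrix
Xc = X - X.mean(axis=0)
cov = np.cov(Xc, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(cov)

# eigh returns eigenvalues in ascending order: put the largest first
order = np.argsort(eigvals)[::-1]
eigvecs = eigvecs[:, order]

# Principal component scores: the data expressed in the eigenvector basis
scores = Xc @ eigvecs

corr_original = np.corrcoef(X, rowvar=False)
corr_scores = np.corrcoef(scores, rowvar=False)

print("correlation of the original features:")
print(np.round(corr_original, 3))
print("correlation of the principal components:")
print(np.round(corr_scores, 3))
```

The off-diagonal entries of the second matrix are zero up to floating point error, while the first two original features remain almost perfectly correlated.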

Another nice advantage is that, since the PCA model uses fewer variables, it is faster. Note that I decided to apply PCA to the whole dataset, not only to the training set of each round of cross-validation, and then used the resulting eigenvectors to obtain the PCs for both the training and testing sets of each iteration. It could be argued that in practice you only have access to the training set, so the PCA should be fitted on that alone.
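For comparison, the stricter alternative mentioned above (fitting PCA on the training set only and reusing its eigenvectors for the testing set) could look roughly like this. It is a sketch on random data, not the procedure used in this post; `fit_pca` and `project` are hypothetical helper names.

```python
import numpy as np


def fit_pca(X_train, n_components):
    """Estimate the mean and the leading eigenvectors from the training set only."""
    mean = X_train.mean(axis=0)
    # Rows of vt are the eigenvectors of the training covariance matrix,
    # ordered by decreasing eigenvalue
    _, _, vt = np.linalg.svd(X_train - mean, full_matrices=False)
    return mean, vt[:n_components].T


def project(X, mean, components):
    """Express X in the basis learned on the training set."""
    return (X - mean) @ components


rng = np.random.default_rng(2)
X = rng.normal(size=(100, 50))  # random stand-in for one cross-validation round

# One train/test split: 80 training rows, 20 testing rows
indices = rng.permutation(100)
test_idx, train_idx = indices[:20], indices[20:]

mean, components = fit_pca(X[train_idx], n_components=30)

# The same mean and eigenvectors are applied to both sets
train_pcs = project(X[train_idx], mean, components)
test_pcs = project(X[test_idx], mean, components)

print(train_pcs.shape, test_pcs.shape)
```

The key point is that the testing rows never influence the mean or the eigenvectors, so no information from the held-out fold leaks into the transformation.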

The code I used is here below
