Computer programs are generally believed to be bad at being creative and at tasks that require “a human mind”. Dealing with the meaning of text, words and sentences is one of these tasks. That’s not always the case, though. For instance, sentiment analysis is an application of Machine Learning where computer programs try to extract the overall feeling expressed in large collections of articles.

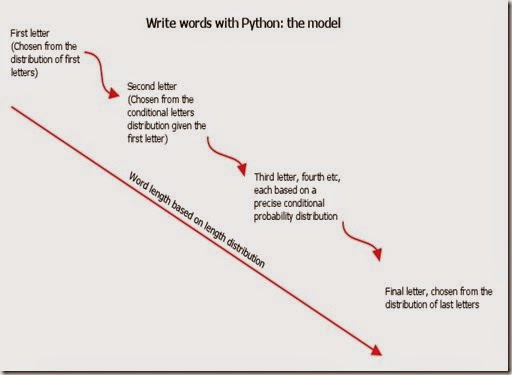

But here we are talking about something a lot simpler: by using Markov chains and some statistics, I developed a simple computer program which generates words. The model works as follows:

As a first step, the program is fed with a long text in the selected language. The longer the text, the better. Next, the program analyses each word and gets the probability distributions for the following:

- Length of the word

- First character (or letter)

- Character (or letter) next to a given one

- Last character (or letter)
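The four tables above could be gathered roughly as follows. This is a minimal Python 3 sketch, not the original code: the function name `build_model`, the regular expression used to split words, and the choice to store raw counts (rather than normalised probabilities) are all assumptions made for illustration.

```python
from collections import Counter, defaultdict
import re

def build_model(text):
    """Collect the four frequency tables from a text (hypothetical helper)."""
    # Assumption: a "word" is any run of letters; digits and punctuation are dropped.
    words = re.findall(r"[^\W\d_]+", text.lower())
    lengths = Counter()              # word length -> count
    first = Counter()                # first letter -> count
    last = Counter()                 # last letter -> count
    following = defaultdict(Counter) # letter -> (next letter -> count)
    for w in words:
        lengths[len(w)] += 1
        first[w[0]] += 1
        last[w[-1]] += 1
        # Count every pair of adjacent letters inside the word.
        for a, b in zip(w, w[1:]):
            following[a][b] += 1
    return lengths, first, following, last

lengths, first, following, last = build_model("The cat, the hat")
print(following["t"])  # letters seen right after "t"
```

Raw counts are enough here because they can be used directly as weights when sampling; dividing each count by the table total would give the probabilities themselves.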

Then, once all this data has been gathered, a function is run that draws from these distributions in order to generate words.

Each part is then glued together and returned by the function.
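A generating function along these lines might look like the sketch below (Python 3.6+). It is only one possible reading of the description: the names `draw` and `generate_word`, the toy tables, and in particular the decision to draw the last letter independently from the last-letter table are assumptions, not the original implementation.

```python
import random

def draw(counts, rng):
    """Pick one key at random, weighted by its count."""
    keys = list(counts)
    return rng.choices(keys, weights=[counts[k] for k in keys], k=1)[0]

def generate_word(lengths, first, following, last, rng=random):
    """Build one word from the four tables (hypothetical sketch)."""
    n = draw(lengths, rng)
    chars = [draw(first, rng)]
    # Middle letters: each depends only on the previous letter (the Markov step).
    while len(chars) < n - 1:
        options = following.get(chars[-1])
        if not options:  # no successor was ever observed for this letter
            break
        chars.append(draw(options, rng))
    # Assumption: the final letter comes from the last-letter table.
    if n > 1:
        chars.append(draw(last, rng))
    return "".join(chars)

# Toy tables, made up for illustration only.
lengths = {3: 2, 4: 1}
first = {"t": 2, "c": 1}
following = {"t": {"h": 2}, "h": {"e": 1, "a": 1}, "c": {"a": 1},
             "a": {"t": 1}, "e": {"r": 1}}
last = {"e": 2, "t": 1}

print(generate_word(lengths, first, following, last, random.Random(0)))
```

Passing a seeded `random.Random` instance makes runs repeatable, which helps when comparing the output for different input texts.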

On average, 10% of the generated words are real words in the language of the input text (English, French, German or Italian), including prepositions and other short sequences of characters that actually exist.

The most interesting aspect, in my opinion, is that by changing the language of the text fed into the program, one can clearly see how the sequences of characters change dramatically. For instance, when fed English text, the program is more likely to write “th” in words; with German text, words will often contain “ge”; and with Italian text, words will often end in a vowel.
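One quick way to see this effect directly in an input text is to count its most frequent pairs of adjacent letters. The snippet below is a small Python 3 sketch; the function name `top_bigrams` and the sample sentence are made up for illustration.

```python
from collections import Counter
import re

def top_bigrams(text, n=3):
    """Return the n most frequent adjacent-letter pairs inside words."""
    words = re.findall(r"[^\W\d_]+", text.lower())
    counts = Counter(a + b for w in words for a, b in zip(w, w[1:]))
    return counts.most_common(n)

print(top_bigrams("the then that", 1))  # [('th', 3)]
```

Running it on long texts in different languages should make the language-specific pairs stand out.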

Here is the code. Note that in order to speed up the word checking process, I used the NLTK package, which at the time of writing I could only use with Python 2 (NLTK 3 and later also support Python 3). To avoid NLTK you could check each word against an online dictionary, fetching and parsing the web page with urllib, but this is tediously slow: it would take about 15 minutes to check 1000 words. Checking against NLTK's local word list is much faster.
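The checking step itself boils down to a set lookup, which is why a local word list is so much faster than a web request per word. Here is a minimal Python 3 sketch; the function name `fraction_real` and the tiny vocabulary are assumptions used only for illustration.

```python
def fraction_real(candidates, vocabulary):
    """Return the share of candidate words found in the vocabulary."""
    candidates = list(candidates)
    if not candidates:
        return 0.0
    hits = sum(1 for w in candidates if w.lower() in vocabulary)
    return hits / len(candidates)

# Tiny hand-made vocabulary, for illustration only.
vocabulary = {"the", "cat", "hat", "at"}
print(fraction_real(["the", "thx", "cat", "qat"], vocabulary))  # 0.5
```

With NLTK installed and its `words` corpus downloaded, something like `set(w.lower() for w in nltk.corpus.words.words())` could serve as the vocabulary for English.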

Hope this was interesting.
