Among R deep learning packages, MXNet is my favourite. Why, you may ask? Well, I can't really say. It feels relatively simple, maybe because at first sight its workflow looks similar to the one used by Keras, maybe because it was my first deep learning package in R, or maybe because it works very well with little effort. Who knows.

MXNet is not available on CRAN, but it can easily be installed either from precompiled binaries or by building the package from source. I tried to install the GPU version, but for some reason my GPU would not cooperate, so I installed the CPU version, which should be fine for what I plan to do.

As a first experiment with this package, I decided to tackle some image recognition tasks. The first big problem, however, is finding images to work on without falling back on the same old boring datasets. ImageNet is the answer! It provides lists of URLs of publicly available images, which can be downloaded easily with a simple R or Python script.
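
A minimal sketch of such a download script in R, assuming the URLs for one category have already been saved one per line to a text file. The helper `download_images()`, the file name `dog_urls.txt` and the `.jpg` naming scheme are hypothetical. Many URLs in old image lists may no longer work, so each download is wrapped in `tryCatch()` and failures are skipped rather than stopping the run.

```r
# Hypothetical helper: download each URL in `urls` into `dest_dir`.
# Failed downloads (dead links, timeouts) are skipped instead of
# stopping the whole run; the paths of the saved files are returned.
# Files are named img_0001.jpg, img_0002.jpg, ... (assumes JPEG images).
download_images <- function(urls, dest_dir, prefix = "img") {
  dir.create(dest_dir, showWarnings = FALSE, recursive = TRUE)
  saved <- character(0)
  for (i in seq_along(urls)) {
    dest <- file.path(dest_dir, sprintf("%s_%04d.jpg", prefix, i))
    ok <- tryCatch(
      # mode = "wb" writes the file in binary mode (matters on Windows)
      download.file(urls[i], dest, mode = "wb", quiet = TRUE) == 0,
      error = function(e) FALSE,
      warning = function(w) FALSE
    )
    if (ok) {
      saved <- c(saved, dest)
    } else if (file.exists(dest)) {
      unlink(dest)  # remove any partial or empty file left by a failed download
    }
  }
  saved
}

# Example (hypothetical file name):
# urls <- readLines("dog_urls.txt")
# saved <- download_images(urls, dest_dir = "dogs")
```

Skipping failures keeps a long batch download going even when a fraction of the links are dead; the returned paths tell you how many images actually arrived.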

I decided to build a model that classifies images as either dogs or plants, so I downloaded about 1500 images of dogs and plants (mainly flowers).

Preprocessing the data

Here comes the first problem: the images have different sizes, as expected. R has a nice package for working with images, EBImage, which I've been using a lot lately to manipulate images. Scaling rectangular images to squares is not ideal, since it distorts them, but a deep convolutional neural network should be able to deal with that, and since this is just a quick exercise I think this solution is acceptable.

I decided to resize the images to 28x28 pixels and convert them to greyscale, although I could also have kept the RGB channels. I tried 64x64-pixel images, but R would not run smoothly, so I had to go back to 28x28. To resize all the images at once, I wrote a quick, easily customizable R script.
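
As a rough, hypothetical stand-in for such a script, the sketch below performs the same two steps in base R on a plain height x width x 3 array: greyscale conversion and a resize to 28x28 with nearest-neighbour sampling. With EBImage the equivalent steps would typically use `readImage()`, `resize()` and `channel(img, "gray")`; the helper names `to_greyscale()` and `resize_nn()` and the BT.601 luminance weights are assumptions, not the original code.

```r
# Hypothetical helpers: a dependency-free stand-in for the EBImage steps.
# `img` is assumed to be a height x width x 3 array with values in [0, 1].

# Greyscale conversion with the common ITU-R BT.601 luminance weights
to_greyscale <- function(img) {
  0.299 * img[, , 1] + 0.587 * img[, , 2] + 0.114 * img[, , 3]
}

# Nearest-neighbour resize of a matrix to new_h x new_w pixels.
# Rectangular inputs are simply squashed or stretched to the new shape.
resize_nn <- function(m, new_h = 28, new_w = 28) {
  rows <- pmin(nrow(m), floor((seq_len(new_h) - 0.5) * nrow(m) / new_h) + 1)
  cols <- pmin(ncol(m), floor((seq_len(new_w) - 0.5) * ncol(m) / new_w) + 1)
  m[rows, cols, drop = FALSE]
}

# Example: a random 60 x 40 "photo" becomes a 28 x 28 greyscale matrix
photo <- array(runif(60 * 40 * 3), dim = c(60, 40, 3))
small <- resize_nn(to_greyscale(photo))
dim(small)  # 28 28
```

Nearest-neighbour sampling is the crudest resize; EBImage's `resize()` interpolates, which usually gives smoother thumbnails.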

After preprocessing, the images need to be stored in a suitable format for training a model. A greyscale image is basically a two-dimensional matrix, so it can easily be stored as a flattened array (or, more simply, a vector): a 28x28 image becomes a vector of 784 values.
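
A minimal sketch of that flattening step, assuming each preprocessed image is a 28x28 greyscale matrix: `as.vector()` unrolls it column by column into 784 values, and stacking one row per image with the class label in the first column gives a table that could then be saved with `write.csv()`. The helper `images_to_rows()` is hypothetical.

```r
# Hypothetical helper: one row per image, class label first, then the
# 28 * 28 = 784 pixel values unrolled column by column.
images_to_rows <- function(images, label) {
  n_pixels <- length(images[[1]])
  # vapply returns an n_pixels x n_images matrix; t() makes images the rows
  pixels <- t(vapply(images, as.vector, numeric(n_pixels)))
  cbind(label = label, pixels)
}

# Example: three toy "images" labelled 1
toy <- list(matrix(0, 28, 28), matrix(1, 28, 28), matrix(0.5, 28, 28))
dim(images_to_rows(toy, label = 1))  # 3 785
```

Because R stores matrices column by column, assigning `dim()` back to 28 x 28 later restores each image exactly.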

Of course, it would be better to try several different train/test splits, but that can be done later when cross-validating the model.
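
A single random split is easy to sketch in base R. The helper `split_train_test()`, the 20% test fraction and the default seed are assumptions; changing `seed` gives a different split, which is one simple way to see how much the score depends on which images land in the test set.

```r
# Hypothetical helper: shuffle the rows once and hold out `test_frac` of them.
# Note: set.seed() changes the global random number generator state.
split_train_test <- function(data, test_frac = 0.2, seed = 42) {
  set.seed(seed)
  idx <- sample(nrow(data))                  # random permutation of row indices
  test_idx <- idx[seq_len(round(nrow(data) * test_frac))]
  train_idx <- setdiff(idx, test_idx)        # every remaining row
  list(train = data[train_idx, , drop = FALSE],
       test = data[test_idx, , drop = FALSE])
}
```

Using `setdiff()` rather than negative indexing keeps the function correct even when the test fraction rounds down to zero rows.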

Building and testing the model

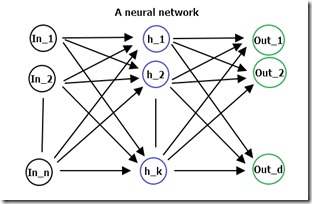

Now that the data is in a usable format, let's go on and build the model. I'll use 2 convolutional layers and 2 fully connected layers.
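
The layer settings are not listed here, so the sketch below assumes a LeNet-style stack (5x5 kernels, 2x2 max pooling with stride 2, 20 and 50 filters) purely to show how a 28x28 input shrinks on its way to the fully connected layers. In MXNet's R API such layers would be built with `mx.symbol.Convolution()`, `mx.symbol.Pooling()`, `mx.symbol.Flatten()` and `mx.symbol.FullyConnected()`; here only the size arithmetic is computed, in base R, and the helpers `out_size()` and `trace_shapes()` are hypothetical.

```r
# Output side length of a square convolution/pooling layer
out_size <- function(input, kernel, stride = 1, pad = 0) {
  (input + 2 * pad - kernel) %/% stride + 1
}

# Trace the feature-map size through an ASSUMED LeNet-style stack:
# conv 5x5 -> max pool 2x2/2 -> conv 5x5 -> max pool 2x2/2 -> flatten
trace_shapes <- function(side = 28, filters = c(20, 50)) {
  s1 <- out_size(side, 5)              # first convolution
  p1 <- out_size(s1, 2, stride = 2)    # first max pooling
  s2 <- out_size(p1, 5)                # second convolution
  p2 <- out_size(s2, 2, stride = 2)    # second max pooling
  c(conv1 = s1, pool1 = p1, conv2 = s2, pool2 = p2,
    flattened = filters[2] * p2^2)     # values fed to the first FC layer
}

trace_shapes()  # conv1 = 24, pool1 = 12, conv2 = 8, pool2 = 4, flattened = 800
```

With the same assumed settings, `trace_shapes(64)` gives 8450 values entering the first fully connected layer instead of 800, so its weight matrix grows by roughly a factor of ten.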

The best accuracy I got on the test set was 74%. I tested different parameters, but it was not easy to get them right. Other activation functions did not give good results and caused some training problems. After 30 iterations, the model starts to overfit very badly and accuracy on the test set drops significantly.

Conclusions

74% is far from the best scores in image recognition tasks, but overall I believe it is a good result, for the following reasons:

- The train and test datasets were very small; 1500 samples is not much.

- This score is still marginally better than the one I obtained using a random forest model.

- The images were squashed and stretched to 28x28 pixels, and they showed very different subjects in very different positions (especially the dog images), not to mention that some had pretty noisy watermarks.

- I got to play around a lot with the hyperparameters of the net.

There is certainly room for improvement: using TensorFlow and a slightly different model, I achieved close to 80% accuracy on the same test set. However, this model took me less time to build and run than the TensorFlow one.

Sorry about the trivial question, but in the following line of code:

predicted_labels <- max.col(t(predict_probs)) - 1

why are we subtracting 1? Could you please explain this?

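Hi, the 1 is subtracted because R, contrary to most programming languages, starts its array/vector indices from 1 instead of 0. max.col() returns the 1-based column index of the highest probability for each sample, while the class labels start from 0, so subtracting 1 maps the index back to the label.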
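
A toy example with made-up probabilities shows what the line does. `predict_probs` is assumed to have one row per class and one column per image (which is why the original line transposes it), with class 0 in the first row and class 1 in the second.

```r
# Made-up probabilities: 2 classes (rows) x 3 images (columns)
predict_probs <- matrix(c(0.9, 0.1,
                          0.2, 0.8,
                          0.3, 0.7), nrow = 2)

# t() puts images in rows; max.col() returns the 1-based column of each row's max
idx <- max.col(t(predict_probs))          # 1 2 2

# Subtract 1 to map R's 1-based column index back to the 0-based class labels
predicted_labels <- idx - 1
predicted_labels                          # 0 1 1
```

Note that `max.col()` breaks ties at random by default; pass `ties.method = "first"` if exact ties are possible and reproducibility matters.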

Hi, in my final table all my predicted labels are 1. Why is that? I guess that is what is preventing my final result from being similar to yours.

Hi, thanks a lot for sharing this. Could you please help with the dataset required to replicate this, i.e. dogs_resized?

Hi Mic,

Thanks a lot, your post is easy to follow and understand. Referring to your last statement, could you please share the details of the TensorFlow model you developed in a similar way?

Hi kpankaj, thanks for your kind comment! This post is a bit old, a few things have changed on my hard drive, and I am afraid the TensorFlow model has been lost somewhere in the backups I made (unless I have already posted it). However, I've got something better: if you'd like to learn the basics of TensorFlow, just follow Sentdex's videos. He's very good at explaining this stuff, and I'd recommend him to everyone who is starting out. Here's the link: https://youtu.be/oYbVFhK_olY?list=PLQVvvaa0QuDfKTOs3Keq_kaG2P55YRn5v

Sir, it helped me a lot. I searched a lot of websites for help, but it's not easy to find code like this. Yours was a perfect job, especially for students who can't pay to get code.

Sir, could you kindly give me the code for data in which there are two or more objects in one picture, to identify each object?

There are a lot of good tutorials online on this topic; try searching on YouTube as well.

Hi, can you please tell me what you do in this step?

dim(test_array) <- c(28, 28, 1, ncol(test_x))

In this step I'm telling R that the data consists of ncol(test_x) samples, each a 28x28-pixel image with 1 channel (1 because they are greyscale images; if they were RGB, this 1 would be a 3).
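
A small sketch of that reshape with made-up data: `test_x` is assumed to hold one flattened 784-pixel image per column, hence `ncol(test_x)` samples. Assigning `dim()` does not move any values; it only reinterprets the same numbers, column by column, as a 28 x 28 x 1 x n array.

```r
# Made-up data: 784 pixel values (rows) for 3 greyscale images (columns)
test_x <- matrix(runif(784 * 3), nrow = 784, ncol = 3)

test_array <- test_x
# 28 x 28 pixels x 1 channel x number of samples; an RGB version would
# need 3 * 784 values per image and c(28, 28, 3, ncol(...)) instead
dim(test_array) <- c(28, 28, 1, ncol(test_x))

dim(test_array)  # 28 28 1 3
# The second image's 28 x 28 slice holds exactly the second column's values
second_image <- test_array[, , 1, 2]
```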
