After some posts on correlation (How to build a correlation matrix in Python) and variance (How to build a variance-covariance matrix in Python), today I’m posting an example application: portfolio VaR. Before you continue reading, please make sure to read and understand the disclaimer at the bottom of the page. Thank you.

Portfolio VaR is the natural extension of the VaR (Value at Risk) risk indicator to a portfolio of stocks. The implementation is a little harder than the one-stock or two-stock version since it involves matrix calculations. If you would like a first look at VaR, you can check my first implementation in R here.

In this script I try to estimate the parametric VaR of a portfolio of stocks using historical data.

A brief outline of the process I followed:

1) First I downloaded data from Quandl (a great source of free data, by the way), then I reshaped the data for each stock into a .csv file following this pattern:

ticker,average return, standard deviation,initial price, R1,R2,R3,…,Rn

where Ri stands for the i-th return and initial price is the most recent price.
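To make the record layout concrete, here is a minimal sketch of how one such line could be parsed. The names `StockRecord` and `parse_record` are hypothetical (they are not the functions from the script), and the sample line contains made-up numbers.

```python
from typing import List, NamedTuple


class StockRecord(NamedTuple):
    ticker: str
    mean_return: float
    std_dev: float
    initial_price: float   # most recent price
    returns: List[float]   # R1 ... Rn


def parse_record(line: str) -> StockRecord:
    """Parse one 'ticker,average return,standard deviation,initial price,R1,...,Rn' line."""
    fields = [f.strip() for f in line.split(",")]
    ticker, mean_r, std, price = fields[:4]
    # Skip empty fields so that a trailing comma does not break the parsing.
    returns = [float(x) for x in fields[4:] if x != ""]
    return StockRecord(ticker, float(mean_r), float(std), float(price), returns)


# Illustrative line only, not real market data.
record = parse_record("ABC, 0.001, 0.02, 54.3, 0.01, -0.02, 0.013")
print(record.ticker, record.initial_price, len(record.returns))
```

Stripping whitespace around each field keeps the parser tolerant of the uneven spacing shown in the pattern above.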

For my analysis I used simple returns; however, logarithmic returns would be more appropriate where possible. Next time I’ll probably use log returns.
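As a quick illustration of the difference between the two, here is a small sketch. It assumes the prices are ordered from oldest to newest; the function names and the price list are made up for the example.

```python
import math


def simple_returns(prices):
    """Simple returns: P_t / P_(t-1) - 1."""
    return [p1 / p0 - 1.0 for p0, p1 in zip(prices, prices[1:])]


def log_returns(prices):
    """Logarithmic returns: ln(P_t / P_(t-1))."""
    return [math.log(p1 / p0) for p0, p1 in zip(prices, prices[1:])]


prices = [100.0, 102.0, 99.96]  # illustrative prices, oldest first
print(simple_returns(prices))
print(log_returns(prices))
```

One practical advantage of log returns is that they add up over time: the sum of the daily log returns equals the log return over the whole period.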

2) The script contains 9 functions:

Two functions are the “builder functions” (fillDicts and runSimulation), which are used to load the data into the program.

Two functions (correlationMatrix and varCovarMatrix) are auxiliary: the VaR function uses them to estimate VaR.

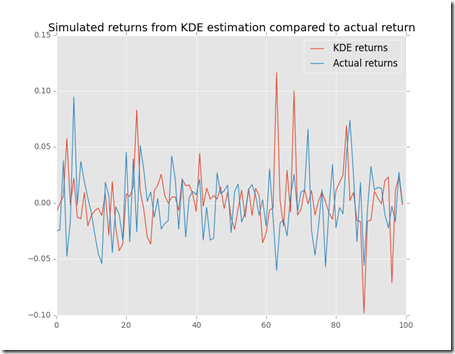

A plot function plots (if needed) the future returns simulated by the runSimulation function. The model used to simulate future prices is fairly simple and can be found here.

A function (portfolioExpectedReturn) calculates the portfolio expected return based on historical data.

And finally, two functions (simple_optimise_return and optimise_risk_return) to optimise the portfolio for high returns and the risk/return ratio, respectively.

3) Some preliminary notes and assumptions.

The model is based on parametric VaR, so it assumes that returns are normally distributed (a smooth bell curve). Furthermore, the VaR is calculated for a holding period of 1 day.

For the sake of simplicity I calculated 99% VaR for each example and ran the optimisation functions for a portfolio of 10 stocks. The optimisation functions operate as follows:
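For reference, here is a minimal sketch of a 1-day parametric (variance-covariance) VaR under the normality assumption. It is not the VaR function from the script: the names are hypothetical, the mean return is ignored (a common simplification), and the return series are assumed to be aligned and of equal length.

```python
from math import sqrt
from statistics import NormalDist, mean


def covariance_matrix(returns):
    """Sample variance-covariance matrix; returns[i] is the return series of stock i."""
    n_obs = len(returns[0])
    means = [mean(r) for r in returns]
    return [[sum((a - mi) * (b - mj) for a, b in zip(ri, rj)) / (n_obs - 1)
             for rj, mj in zip(returns, means)]
            for ri, mi in zip(returns, means)]


def portfolio_var(weights, cov, value, confidence=0.99):
    """1-day parametric VaR: z * sqrt(w' * Cov * w) * portfolio value."""
    variance = sum(wi * wj * cov[i][j]
                   for i, wi in enumerate(weights)
                   for j, wj in enumerate(weights))
    z = NormalDist().inv_cdf(confidence)  # about 2.326 for 99%
    return z * sqrt(variance) * value


# Illustrative returns for two stocks (made-up numbers).
sample_returns = [[0.01, -0.01, 0.02, -0.02],
                  [0.005, 0.002, -0.01, 0.004]]
cov = covariance_matrix(sample_returns)
print(portfolio_var([0.5, 0.5], cov, value=1000.0))
```

The result is read as the loss that should not be exceeded over one day with 99% confidence, provided the normality assumption holds.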

simple_optimise_return yields the portfolio of n stocks with the highest average daily return in the sample, while optimise_risk_return yields the n stocks with the highest ratio of average return to standard deviation (here n = 10). The idea behind the second optimisation is that you may want the highest possible return per unit of risk you are bearing. Note that I am assuming that the total invested amount is equally distributed among the 10 stocks (10% of the total amount is invested in each stock).
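The two selection rules can be sketched as follows. This is only an illustration of the idea, not the code of simple_optimise_return and optimise_risk_return: the function names, the `stats` dictionary layout and the numbers are all assumptions made for the example.

```python
def top_by_return(stats, n=10):
    """Tickers with the highest average return; stats maps ticker -> (mean, std)."""
    return sorted(stats, key=lambda t: stats[t][0], reverse=True)[:n]


def top_by_risk_return(stats, n=10):
    """Tickers with the highest average return per unit of standard deviation."""
    eligible = [t for t in stats if stats[t][1] > 0]  # avoid division by zero
    return sorted(eligible, key=lambda t: stats[t][0] / stats[t][1], reverse=True)[:n]


def equal_weights(tickers):
    """Split the invested amount equally among the selected tickers."""
    return {t: 1.0 / len(tickers) for t in tickers}


def expected_return(weights, stats):
    """Weighted average of the historical mean returns."""
    return sum(w * stats[t][0] for t, w in weights.items())


# Made-up (mean daily return, standard deviation) pairs.
stats = {"A": (0.002, 0.04), "B": (0.001, 0.01), "C": (0.0015, 0.02)}
best = top_by_risk_return(stats, n=2)
weights = equal_weights(best)
print(best, expected_return(weights, stats))
```

In this toy sample, stock A has the highest average return but the lowest return per unit of risk, so the two rules select different portfolios.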

While keeping in mind that the past usually does not predict the future with great accuracy, this script gave me some insight into what could (possibly) have been a good portfolio of stocks in recent years.

A side note: since this is just for fun, some data is actually missing, and some stocks have longer time series because they have been on the market much longer than others.

Another side note: a great improvement to this script would be to quantify the benefits of diversification (in terms of lower risk) and to optimise for correlation (maximum and minimum correlation, for instance).
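One possible way to measure the diversification benefit, sketched here under the same normality assumption, is to compare the sum of the stand-alone VaRs with the VaR of the portfolio. The function is hypothetical and is not part of the script; the volatilities and correlations are made-up inputs.

```python
from math import sqrt
from statistics import NormalDist


def diversification_benefit(weights, vols, corr, value, confidence=0.99):
    """Sum of stand-alone VaRs minus portfolio VaR (zero if all correlations are 1)."""
    z = NormalDist().inv_cdf(confidence)
    # VaR of each position taken in isolation.
    standalone = sum(z * w * s * value for w, s in zip(weights, vols))
    # Portfolio variance built from volatilities and the correlation matrix.
    variance = sum(weights[i] * weights[j] * vols[i] * vols[j] * corr[i][j]
                   for i in range(len(weights))
                   for j in range(len(weights)))
    return standalone - z * sqrt(variance) * value


w, vols = [0.5, 0.5], [0.02, 0.02]
uncorrelated = diversification_benefit(w, vols, [[1.0, 0.0], [0.0, 1.0]], 1000.0)
perfect = diversification_benefit(w, vols, [[1.0, 1.0], [1.0, 1.0]], 1000.0)
print(uncorrelated, perfect)
```

The lower the correlations between the stocks, the larger the gap between the two figures, which is the risk reduction due to diversification.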

Now I run the program.

After some computation, here is the result we get:

The results look interesting to me, especially since the VaR of the last portfolio is really low compared with the first portfolio I used to test the VaR function, and the non-weighted return seems pretty good too.

Disclaimer

This article is for educational purposes only. The author is not responsible for any consequence or loss due to inappropriate use. The article may well contain mistakes and errors. The numbers used might not be accurate. You should never use this article for anything other than educational purposes.

![clip_image002[17] clip_image002[17]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgFGpczjjzD1lB0i3Ne1j_cXDohjyyrjrdX8aDVPyBk-I6H7zUVeimtYRA1kKKxa9TRGTLmmM4LPzmaesQispD55gHCzsqGS_f_QMaiUTWHkOvQ1htchV9Q7areJpuKaKWF6V0wc2qBGB8/?imgmax=800)