Please before continue reading, make sure to read the disclaimer at the bottom of this article.

RSA is a well-known cryptosystem used in many cases where secure data transmission is needed. It basically rely on the also well-known issue of factoring big numbers. As you may recall from high school, each number has a unique prime number factorization. This is the very strength of RSA. In order to decrypt a message encrypted with RSA you would need to factorize a REALLY BIG number and this problem may take a very long time to be solved, it may take so long that it becomes unpractical to be achieved.

If you want to get more on RSA click here.

Here is the algorithm carefully described.

I have always been fascinated by encryption and cryptosystems. For those who did not know, Alan Turing, during the Second World War, devised a machine which made it possible to decrypt Enigma code in relatively short time and therefore gain a significant advantage. This machine was basically the first computer. You can watch this incredible piece of history on you tube. Here are some interesting videos:

Numberphile Enigma video

An interesting documentary

Now let’s get to the necessary premises and the code!

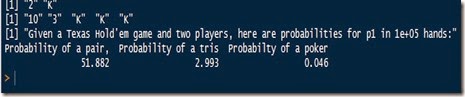

First of all, to start off you need two big primes, p and q. I do not know how they usually get these primes (I haven’t covered it yet) but anyway, they are almost all you need to start your “naive” RSA encrypting system in Python. We will use small numbers for the sake of simplicity and this is basically one of the factors that led me to use the word “naive” since it could be easily broken in a short time.

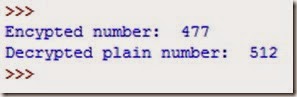

Here is the result which should be printed out:

Therefore, hypothetically, Bob, who needs to send a message (512) to Alice, would use Alice’s public key to encrypt 512 and then send her the number 477. Alice would then use her private key to decrypt 477 and get back 512, the original message.

Hope this was interesting.

Disclaimer: This article is for educational purpose ONLY. If you need a security system you should ask to professionals who are competent in the field. The author of the article is by no means a professional or an expert in this field and might, therefore, make big mistakes. This code must NOT be used for anything other than educational purpose. The provider of this code does not guarantee the accuracy of the results and accepts no liability for any loss or damage that may occur as a result of the use of this code. Understanding and agreeing to the terms of this disclaimer is a condition of use of this code. By reading the article you confirm you have understood and will comply with this disclaimer.